By Eden Watt, Vice President, Application Innovation, Able-One Systems

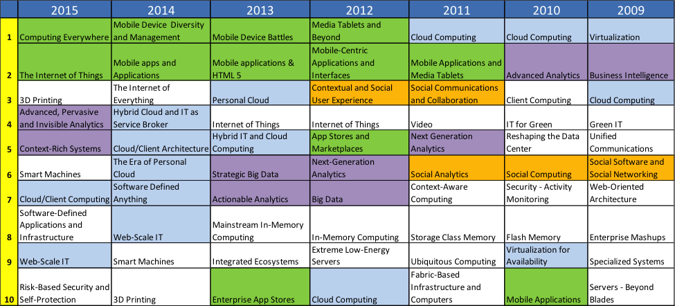

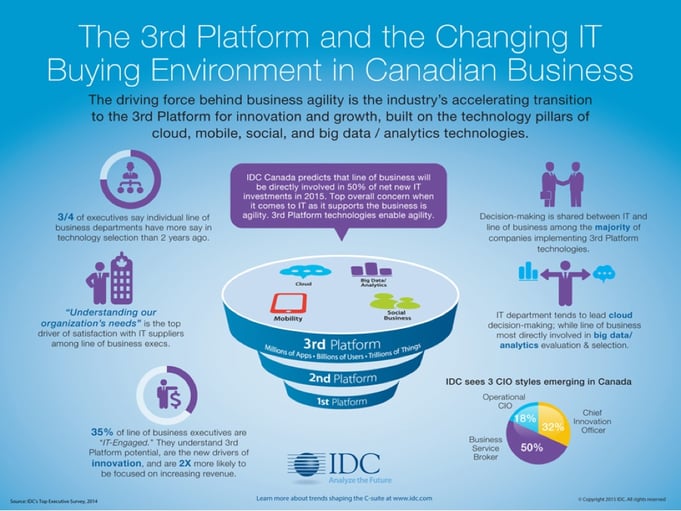

Gartner, IDC, and other industry analysts produce compelling research that drives organizations toward technology innovations that transform business. A key concept in this research is what they call the Third Platform, also known as SMAC (Social, Mobile, Analytics, Cloud), which is defined as the interdependencies between mobile computing, social media, cloud computing, big data/analytics, and now the Internet of Things (IoT).

According to this terminology, the first platform refers to mainframes, which emerged in the 1950s and continue today, for example with IBM i, z, and p servers. The second platform is the client/server architecture, which became popular in the 1990s and also continues today, with desktop applications interfacing with back-end servers (including ‘mainframes’).

Figure 1 below is a graphical representation of the Third Platform, from IDC. For a great summary of this material, including videos, check out http://www.idc.com/prodserv/3rd-platform.

It’s important to note that if your platform is considered ‘first’, this doesn’t necessarily mean it needs to go or be replaced. However, it is worth considering how to incorporate newer capabilities and technologies into existing applications and infrastructures. This leads us to the ever-popular topic of modernization.

To modernize means to enhance something to make it current. Some organizations may not consider their projects to be ‘modernization’ projects, but consider whether you have done any of the following: extended your enterprise systems to enable mobile or web interfaces, perhaps so that customers can order online, perform web inquiries, or fill out e-forms; used web services to automate business processes or interfaces between systems; upgraded your DB2/400 database to SQL; mined your data sources to perform business intelligence or advanced analytics; provided more advanced tools for your developers; or re-engineered business processes to reduce manual steps or increase automation. If you have enhanced your business applications and application management in any of these areas, then this is typically considered a modernization project.

Some object to the term because it implies that what you’re starting with is old or out of date. The ‘first platform’ systems being modernized are typically referred to as legacy systems, although some, not wanting to offend, call them heritage systems. The bottom line is that if the core of the application, including the user interface design, database, and application code, was developed in the 1990s or earlier, then it fits in this category. That’s not a bad thing, and it doesn’t mean the application hasn’t served the business well, both in the past and today. However, it’s probably a safe bet that the original architecture was not designed to take advantage of the current “third platform” technologies that are important for remaining competitive and supporting your business goals.

The specific business drivers for a modernization project vary. They include the need to deliver applications to customers and partners; integrate applications after acquisitions or line-of-business changes; reduce manual, paper-based, or redundant processes; deliver operational efficiencies and workflow improvements through streamlining and automation; assist executives in analyzing business trends; and enable roaming employees to do their jobs from mobile devices.

The technology evolution we’ve seen in the 2000s is leading to “nothing less than the reinvention and continuous transformation of every industry in the world,” according to IDC’s Chief Research Officer, Crawford del Prete.

So, whether you are reacting to a specific, upcoming business challenge and related modernization project or are working with your organization to plot a strategic direction for the future, IT leadership is critical to business success in this Third Platform Era.

On December 2, 2015, we’re hosting an event for Application Managers to collaborate on the latest strategies and technologies for modernization on IBM i.

Numerous studies have identified modernization as a top concern for organizations, particularly those with mission-critical applications on IBM i servers. IBM i has a solid reputation for low cost of ownership, power, scalability, reliability, and security. However, because its initial, widespread growth in the 1990s came via software applications developed in that timeframe, many organizations today are working with systems that may need to be updated or extended to take advantage of current technologies.

The key goal of any modernization project must be to leverage your investment in key enterprise systems by extending them to align with current business goals and technology innovations.

IBM Power Systems running the IBM i operating system, previously known as AS/400 or iSeries, can continue to be a strategic platform for operating your business for years to come, but we believe ongoing application modernization, extensions, and/or retooling are key to ensuring that the rest of your organization believes this too.

However, the term modernization describes a wide range of tactics and strategies, and not every approach makes sense for every organization. We will continue to share customer stories and the latest technologies and strategies on this blog, at our events, and in our dealings with our customers. If this is of interest to you, we’d love to see you at our event, which we plan to make both informative and collaborative so you can determine what makes sense for your business.

To explore options for modernizing your applications, join us in person for a special event on Wednesday, December 2, 2015, from 12 PM to 3 PM in Toronto. Register today.

By Chuck Luttor, IBM i Software Developer at Able-One Systems Inc.
